Ongoing Projects

Multi-modal storage in a dynamic, distributed working memory network

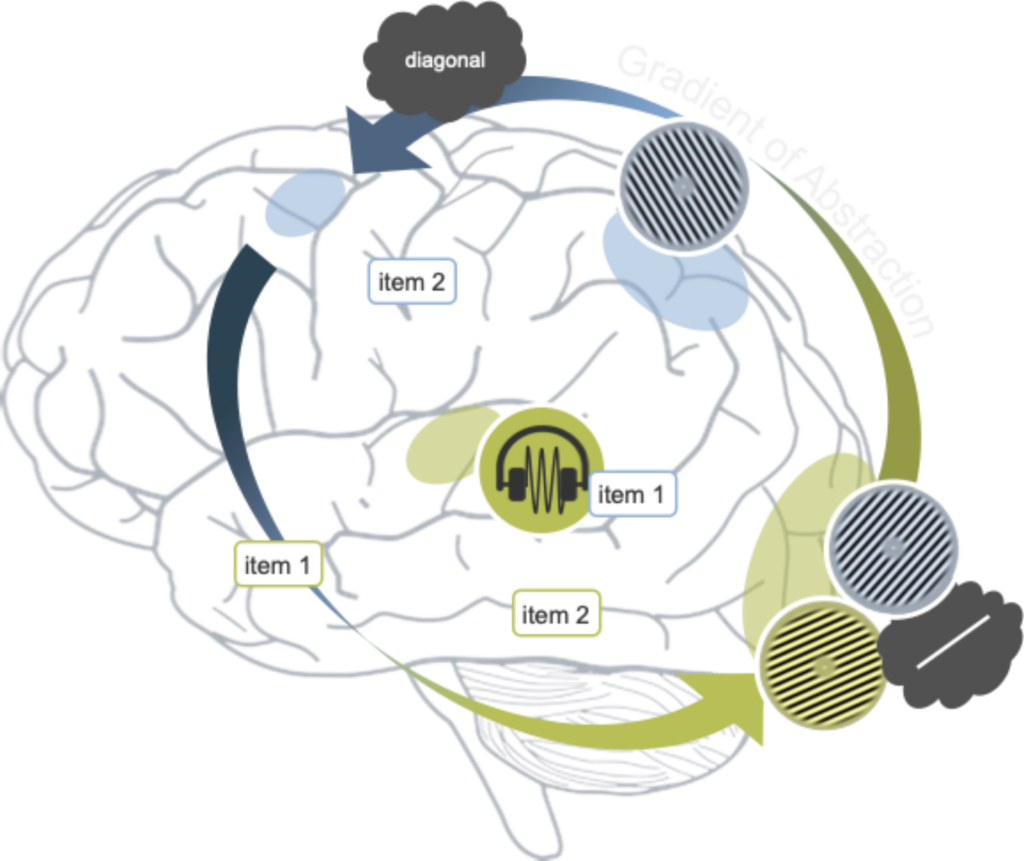

The question of where our working memories are stored does not have to have a single, definite answer. We know that many parts of the human brain can represent memorized contents, but how are these representations used, and how do they interact? Vivien Chopurian and Zhiqi Kang joined the lab in 2021 to investigate whether short-term memories for visual and auditory contents are dynamically allocated to minimize interference. It might be easy to remember two very different contents (e.g., a sound and an image). When remembering similar contents (e.g., two images), however, their neural representations might interfere with each other, making it harder to distinguish between them. To minimize such interference, the representations of different images could be allocated to different parts of the brain. Vivien will present some early results at VSS 2022.

The different neural codes for working memory

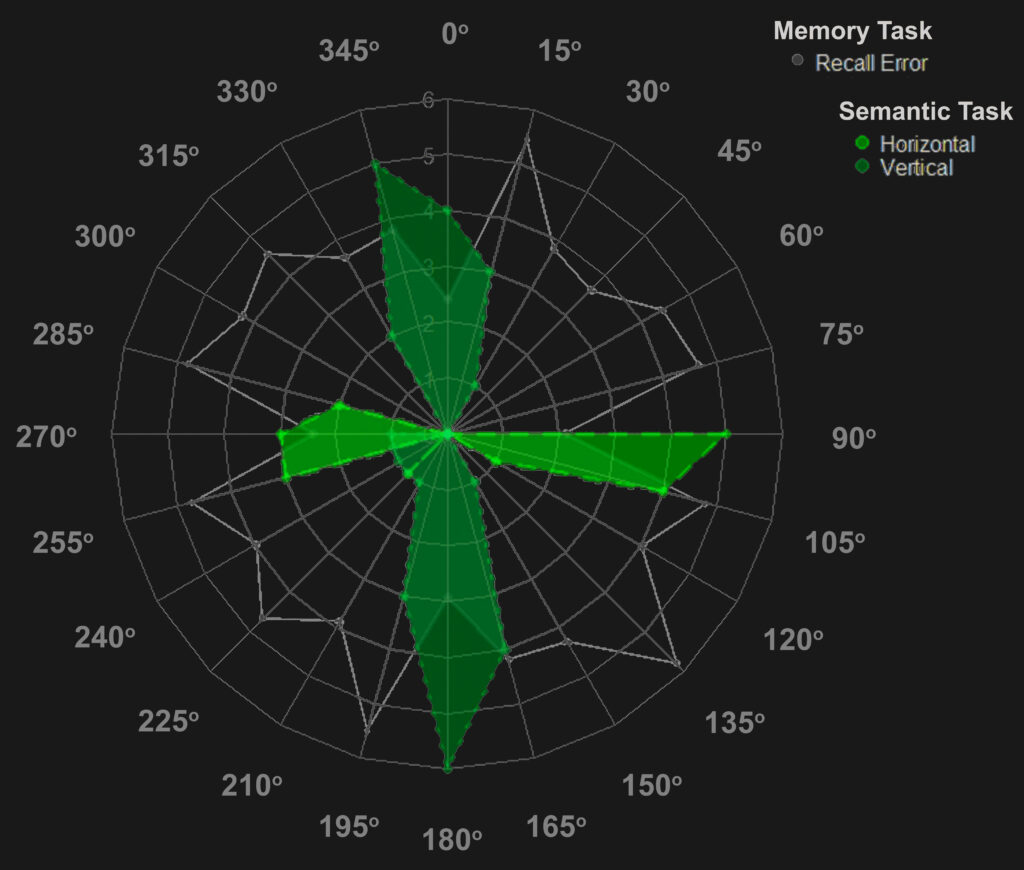

In a second line of research, we investigate the neural codes and strategies used during working memory. We recently submitted a preprint showing that different brain regions use different neural codes to represent memorized contents (work with Chang Yan, Carsten Allefeld, and John-Dylan Haynes). We found that regions of the ventral stream (areas V4 and VO1) use a categorical mnemonic code during working memory: colors are retained not as their original hues (each hue's unique mixture of red, green and blue) but as members of broad categories (like ‘red’, ‘green’ and ‘blue’). We found no evidence for such a categorical code in sensory areas. This insight helps us understand why different regions are recruited differently during working memory.

Joana Seabra and Andreea-Maria Gui joined the lab this year to dive deeper into this question. In particular, they want to find out what role verbal neural representations and strategies play when we memorize locations and orientations. When we describe spatial properties (like ‘left’, ‘right’, ‘horizontal’ and ‘diagonal’), can the words we use explain the errors we make in visual working memory? We are also investigating how verbal distractors affect visual working memory.

- Here is our color categorization paper (tweeprint summary, here; talk, here)

- Click here and here for Joana’s posters for VSS 2022 & 2023

- Andreea’s poster for VSS 2023 is here

Machine learning methods for reading overlapping brain signals

The central tools for our research are statistical and machine learning methods that identify neural representations of working memories and help us understand how they relate to behavior. A critical challenge for these tools is the overlap and interaction of multiple representations. How can we investigate these representations independently, even when they are held in the same region, and how might the representation of one item change when another is present? Thomas collaborated with Polina Iamshchinina, Surya Gayet, and Rosanne Rademaker on a commentary on pattern analysis for overlapping representations. We showed how analysis choices for discriminating overlapping representations, such as the selection of the training set (training on sensory data or on different mnemonic data), can greatly affect the results. Currently, we are developing tools that allow us to quickly evaluate the effect of such choices across a large space of possible design and analysis approaches.

- Iamshchinina, P., Christophel, T. B., Gayet, S., & Rademaker, R. L. (2021). Essential considerations for exploring visual working memory storage in the human brain. Visual Cognition, 29(7), 425–436. (here)
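
To illustrate why the choice of training set matters, here is a minimal simulated sketch in Python (NumPy only). It is not the analysis pipeline from the commentary: the voxel count, the two activity patterns, the assumption that the memory trace shares only a weak component with the sensory pattern, and the nearest-centroid classifier are all hypothetical choices made for illustration. A classifier trained on sensory trials can only exploit the weak shared component when tested on memory trials, whereas a classifier trained on memory trials picks up the memory-specific pattern, so the two training sets can lead to very different conclusions about the same simulated region.

```python
import numpy as np

# Hypothetical simulation: 50 voxels, two stimulus classes coded as -1/+1.
N_VOXELS = 50

# Sensory pattern: carried by the first 25 voxels (norm 3.0).
SENSORY = np.zeros(N_VOXELS)
SENSORY[:25] = 0.6

# Memory-specific pattern: carried by the other 25 voxels, orthogonal to SENSORY.
MNEMONIC_ONLY = np.zeros(N_VOXELS)
MNEMONIC_ONLY[25:] = 0.6

# Assumption: during the delay only a weak trace of the sensory pattern remains,
# superimposed on a different, memory-specific pattern.
MEMORY = 0.1 * SENSORY + MNEMONIC_ONLY


def simulate(pattern, n_trials, rng, noise_sd=1.0):
    """Return trials x voxels data and 0/1 labels for a signed pattern plus noise."""
    labels = rng.integers(0, 2, size=n_trials)
    signs = np.where(labels == 1, 1.0, -1.0)
    data = signs[:, None] * pattern[None, :]
    data = data + noise_sd * rng.standard_normal((n_trials, pattern.size))
    return data, labels


def fit_centroids(data, labels):
    """Nearest-centroid classifier: one mean pattern per class."""
    return np.stack([data[labels == c].mean(axis=0) for c in (0, 1)])


def accuracy(centroids, data, labels):
    """Assign each trial to the closest centroid (Euclidean) and score it."""
    dists = ((data[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float((dists.argmin(axis=1) == labels).mean())


def compare_training_sets(seed=0, n_trials=400):
    """Decode memory trials after training on sensory vs. independent memory trials."""
    rng = np.random.default_rng(seed)
    sensory_x, sensory_y = simulate(SENSORY, n_trials, rng)
    memory_train_x, memory_train_y = simulate(MEMORY, n_trials, rng)
    memory_test_x, memory_test_y = simulate(MEMORY, n_trials, rng)
    return {
        # Train on sensory (perception) trials, test on memory trials.
        "sensory_to_memory": accuracy(
            fit_centroids(sensory_x, sensory_y), memory_test_x, memory_test_y),
        # Train and test on independent sets of memory trials.
        "memory_to_memory": accuracy(
            fit_centroids(memory_train_x, memory_train_y), memory_test_x, memory_test_y),
    }


if __name__ == "__main__":
    print(compare_training_sets())
```

With these settings, training on memory trials should decode the memorized class almost perfectly, while cross-decoding from sensory trials stays only modestly above chance. Shrinking the `0.1` weight in `MEMORY` toward zero pushes the cross-decoding result toward chance, even though the simulated region still holds the memory.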